“Mind Reading” Research: Identifying Emotions Based on Brain Activity

Scientists from the Dietrich College of Humanities and Social Sciences at Carnegie Mellon University (CMU), a world leader in brain and behavioral sciences, have identified which emotion a person is experiencing on the basis of brain activity. The study, recently published in PLOS ONE, combines functional magnetic resonance imaging (fMRI) with machine learning to measure brain signals and accurately read individuals' emotions.

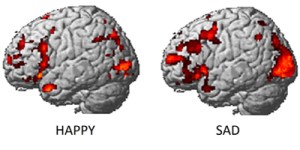

The brain image on the left shows the activation pattern associated with happiness; the image on the right shows the pattern associated with sadness.

The findings illustrate how the brain categorizes feelings, giving researchers the first reliable process for analyzing emotions. Over the last few years there has been much discussion about the lack of reliable methods for evaluating emotions: people often cannot report their feelings, since many emotional responses may not be consciously experienced, and neuromarketers have been trying to investigate them with technology instead.

Karim Kassam (Assistant Professor of Social and Decision Sciences and lead author of the study) says that “this research introduces a new method with potential to identify emotions without relying on people’s ability to self-report. It could be used to assess an individual’s emotional response to almost any kind of stimulus, for example, a flag, a brand name or a political candidate.”

One challenge for the research team was to find a way to repeatedly and reliably evoke different emotional states in the participants. Traditional approaches, such as showing subjects emotion-inducing film clips, would likely have been unsuccessful because the impact of a film clip diminishes with repeated viewing. The researchers solved the problem by recruiting actors from CMU's School of Drama.

For the study, 10 actors were scanned at CMU's Scientific Imaging & Brain Research Center while viewing words for nine emotions: anger, disgust, envy, fear, happiness, lust, pride, sadness and shame. Inside the fMRI scanner, the actors were instructed to enter each of these emotional states multiple times, in random order. In a second phase of the study, participants were shown neutral and disgusting photos they had not seen before.

The computer model was built by applying statistical analysis to the fMRI activation patterns recorded for 18 emotion words, so it learned the emotion patterns from self-induced emotions. Even so, it was able to correctly identify the emotional content of the photos from the viewers' brain activity.

To identify emotions within the brain, the researchers ran three analyses. First, they used the participants' neural activation patterns in early scans to identify the emotions the same participants experienced in later scans. Next, they applied the machine learning model trained on the self-induced emotions to guess which emotion the participants were experiencing when they viewed the disgusting photographs. Finally, they trained on the neural activation patterns of all but one participant and used the result to predict the emotions experienced by the held-out participant. This tests how well emotions could be identified if a new individual were put in the scanner and exposed to an emotional stimulus. Here the model achieved a rank accuracy of 0.71, well above the chance level of 0.5. Rank accuracy measures where the correct emotion falls in the model's ranked list of guesses: 1.0 means it is always ranked first, and random guessing averages 0.5.
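The rank-accuracy metric and the leave-one-participant-out test described above can be illustrated with a small sketch. This is not the study's code or model: the nearest-centroid classifier, the synthetic "voxel" data, and the names `rank_accuracy`, `leave_one_participant_out` and `make_synthetic_data` are hypothetical, chosen only to show how the metric and the hold-out procedure work. The synthetic data assume, for illustration, that every participant shares the same underlying activation signature for each emotion plus random noise.

```python
import math
import random
from statistics import mean

EMOTIONS = ["anger", "disgust", "envy", "fear", "happiness",
            "lust", "pride", "sadness", "shame"]


def rank_accuracy(scores, true_label):
    """Normalized rank accuracy for one trial.

    scores maps each candidate label to a model score (higher = more likely).
    Returns 1.0 if the true label is ranked first, 0.0 if it is ranked last;
    random guessing averages 0.5. Requires at least two candidate labels.
    """
    ranked = sorted(scores, key=scores.get, reverse=True)
    position = ranked.index(true_label)  # 0 = model's top guess
    return 1.0 - position / (len(ranked) - 1)


def centroids(samples):
    """Hypothetical classifier: average feature vector per emotion label."""
    groups = {}
    for label, vec in samples:
        groups.setdefault(label, []).append(vec)
    return {label: [mean(col) for col in zip(*vecs)]
            for label, vecs in groups.items()}


def score(vec, cents):
    # Negative Euclidean distance: the closer the centroid, the higher the score.
    return {label: -math.dist(vec, c) for label, c in cents.items()}


def leave_one_participant_out(data):
    """data maps participant id -> list of (label, feature_vector).

    For each participant, train on everyone else and report the mean
    rank accuracy on the held-out participant's trials.
    """
    results = {}
    for held_out in data:
        train = [s for p, samples in data.items() if p != held_out
                 for s in samples]
        cents = centroids(train)
        accs = [rank_accuracy(score(vec, cents), label)
                for label, vec in data[held_out]]
        results[held_out] = mean(accs)
    return results


def make_synthetic_data(n_participants=10, n_voxels=20, trials=6,
                        noise=1.0, seed=0):
    """Synthetic stand-in for fMRI data (assumption, not real measurements)."""
    rng = random.Random(seed)
    # One shared signature per emotion, common to all participants.
    signatures = {e: [rng.gauss(0, 1) for _ in range(n_voxels)]
                  for e in EMOTIONS}
    data = {}
    for p in range(n_participants):
        samples = []
        for e in EMOTIONS:
            for _ in range(trials):
                vec = [s + rng.gauss(0, noise) for s in signatures[e]]
                samples.append((e, vec))
        data[p] = samples
    return data


if __name__ == "__main__":
    data = make_synthetic_data()
    per_participant = leave_one_participant_out(data)
    print(f"mean rank accuracy: {mean(per_participant.values()):.2f}")
```

Raising `noise` in the synthetic data pushes the mean rank accuracy toward the 0.5 chance level, which is why a score such as 0.71 on truly held-out people is read as evidence that emotion patterns are shared across individuals.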

This suggests that people tend to encode emotions neurally in similar ways. Emotion signatures are not limited to specific brain regions such as the amygdala; instead, they produce characteristic patterns across a number of brain regions. The research team also found that although the model on average ranked the correct emotion highest among its guesses, it was best at identifying happiness and least accurate at identifying envy. It rarely confused positive and negative emotions, suggesting that these have distinct neural signatures. Finally, it was least likely to misidentify lust as any other emotion, suggesting that lust produces a pattern of neural activity distinct from all other emotional experiences.
